Powerful Meta large language model widely available online

A set of sophisticated large language models developed by Facebook parent company Meta, and intended to be accessed only by authorized researchers, was made available for download on Friday, releasing to the public the most powerful such AI model yet and increasing the likelihood that the technology might be misused.

Facebook first made the model in question, known as LLaMA, available last month and described it as an effort at “further democratizing access” to AI research. The company made the model, along with the corresponding weights that allow users to fine-tune it for whatever purpose they wish, fully available, but only to select researchers, who the company said would be approved on a case-by-case basis.

On Friday, a link to download the model was posted to 4chan and quickly proliferated across the internet. The model is now easily available for download via a variety of torrents, and a pull request on the Facebook Research GitHub repository asks that a torrent link be added.

A Meta spokesperson said the company aims to share AI models like LLaMA with researchers to help evaluate them. “While the model is not accessible to all, and some have tried to circumvent the approval process, we believe the current release strategy allows us to balance responsibility and openness,” the spokesperson said.

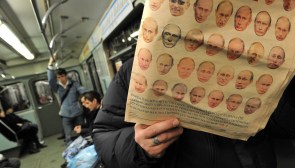

With its unintended release to the public, LLaMA is now the most powerful publicly available large language model, one that could conceivably be misused by sophisticated users. Fine-tuning LLaMA to perform unintended and perhaps harmful tasks would require a fair amount of technical skill, but using the model to generate spam, marketing material or disinformation is eminently possible.

“The LLaMA leak highlights a growing challenge with large language models: transparency,” said Chris Meserole, who directs the Artificial Intelligence and Emerging Technology Initiative at the Brookings Institution. “We want them to be open enough that we can understand what the risks are, but not so open that those risks are easily exploited.”

“Meta tried to strike a better balance by opening access to registered researchers while restricting it to the public at large,” Meserole added. “But now it’s in the worst place of all: the model is still relatively closed to the public at large, but accessible to every malicious actor that seeks it out.”

LLaMA is in fact composed of four different models, differing in the number of parameters they contain. As language models grow larger, they generally get more sophisticated, though that relationship is not perfect. LLaMA is available in 7, 13, 33, and 65 billion parameter versions. In benchmarking tests, LLaMA performs better than or on par with much larger models, such as OpenAI’s GPT-3, DeepMind’s Chinchilla 70B and Google’s PaLM 540B.

As a demonstration of LLaMA’s capabilities, the AI researcher Shawn Presser has been posting examples of the model’s uncanny ability to mimic Star Trek characters. Turning the model toward more nefarious uses, whether obtaining instructions on how to build explosives or writing malware, is merely a question of fine-tuning a technology that has now escaped Meta’s control.

LLaMA’s release may also spur research and innovation, but AI policy experts see the model’s public availability as a concerning development.

“Given the fact these models have broadly unknown capabilities, the more models are out there, the more you’re rolling the dice on someone discovering a genuinely dangerous feature in a widely distributed model,” Jack Clark, a co-founder of the AI company Anthropic, wrote in his newsletter on Monday. “Therefore, a lot of governance/policy conversations trend towards control — how can we somehow control the proliferation of models and also the computers on which these models are trained.”

While large language models have advanced quickly in recent years and captured the public imagination in the process, thinking about how to control this technology and address its dangers has lagged behind. To mitigate the risks posed by large language models, companies like OpenAI have moved away from the openness their names suggest and increasingly restricted access to their tools, such as ChatGPT, offering them only through an online portal or API.

The relative openness of AI models has become a flashpoint in the industry. By releasing LLaMA fairly widely to approved researchers, Facebook aimed to strike a blow in favor of open-access research and make powerful language models available to more or less anyone, rather than only to privileged researchers with relationships to the industry leaders: companies like OpenAI, Google and Microsoft.

With LLaMA’s release, experts like Clark are concerned that more such widely available models are coming, even as methods for assessing their safety advance more slowly than the technology powering them. “This represents a kind of ‘race to the bottom’ in terms of moving from maximal control to maximal diffusion of models,” Clark wrote in his Monday newsletter. “These incentives are powerful: Facebook is, after all, trying to exploit an ‘open access’ ecological niche to distinguish itself in an ecosystem.”