Is a more collaborative approach the answer to fighting global disinformation?

Disinformation is flooding online platforms at such a rate that it’s simply impossible for tech companies, content moderators or researchers to prevent every harmful post from spreading quickly online and causing real-world consequences.

The problem is so overwhelming and complex that it requires social media platforms, government officials and other stakeholders to combine efforts, Graphika CEO John Kelly told CyberScoop in an interview Thursday.

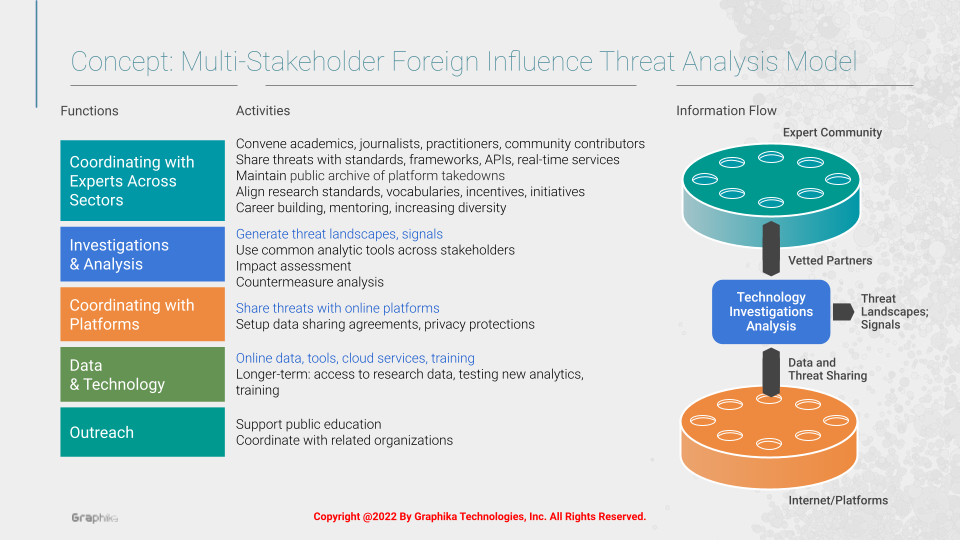

That’s why his social media monitoring firm is developing a blueprint for what it calls a software-based multistakeholder threat center that would track, share and analyze disinformation at scale with journalists, government officials, academics and other experts.

While the virtual threat center remains theoretical until funding materializes, Graphika’s leaders said they have discussed the concept with a variety of potential partners.

Highly regarded for its work identifying strategic influence campaigns online, Graphika says it uses artificial intelligence to uncover and analyze online communities and identify coordinated information operations. It recently collaborated with Facebook and other social media platforms to uncover a sprawling, nearly five-year-long Russian-language, pro-U.S. information operation.

Kelly said he recognizes the dire need for a more expansive shared community to track the threats disinformation poses to society at large.

Graphika’s proposed center would function as a sort of one-stop shop for monitoring and cataloging information operations, a model Kelly said is critical because many experts are siloed from one another, working on disparate efforts.

So far, the Graphika approach resembles other Information Sharing and Analysis Centers, or ISACs, designed to provide important threat information to specific industry stakeholders.

“It’s not just that we want to train everybody to be like a little Graphika,” Kelly said. “We want to provide technology tooling, data exchange, things like that as a layer and a model that then other folks can come in and bring what they may develop a very special expertise in … and then it’s valuable to everybody.”

Independent disinformation experts said a threat center along the lines of what Graphika envisions could be a critical tool for fighting a growing problem.

“It’s clear that there is a global need for a more networked and resourced counter-disinformation response,” said Kevin Sheives, associate director of The International Forum for Democratic Studies at the National Endowment for Democracy. He added that it is vital for civil society leaders to get a “clearer picture of their own information ecosystems, and especially on the impact of their own interventions.”

The Graphika model could bridge the gap between “research and practice” by improving the relationship between social media platforms and civil society researchers and disinformation experts, Sheives said.

“For Facebook especially, data transparency and true partnership among these two communities is needed in order to improve content moderation,” Sheives said.

Graphika has long worked with social media platforms to stamp out disinformation, a track record that others could draw on in launching and running the threat center. Kelly said that many sophisticated information operations appear across platforms and that, because tracking these threats is costly and time-consuming, platforms have historically welcomed support from Graphika’s experts.

In August, Graphika announced its findings in a joint investigation with platforms and Stanford University exposing a web of pro-Western information operations. It has uncovered several other major influence operations in recent years, including one linked to the Russian government known as Secondary Infektion that spanned six years and two continents.

Kelly said social media companies know they need help attacking the “sophisticated problem” of threat actors deceptively coordinating their activities on platforms. He said that, in his experience, platforms welcome assistance in tracking and exposing coordinated campaigns to manipulate the internet.

“That’s a higher-class problem,” Kelly said. “What a center like this can do is make the discovery of that higher level coordinated manipulation … authenticate it, validate it, find out as much about it as possible [and] turn that into the signals that the platforms need to take action and do the investigation on their own terms.”

Many social media platforms do not have the budget to track coordinated disinformation campaigns. Kelly said that Facebook and Google are well-funded, but Telegram, Discord and even Twitter have far less money to throw at the disinformation problem.

The multistakeholder threat center could also be a place where civil society leaders come together to align research goals, settle on a common vocabulary and share examples of information operations in real time, Graphika Chief Technologist Jennifer Mathieu said when describing the model at the annual DEF CON hacker conference in August.

Graphika envisions the center working with social media platforms to gain access to more data than is currently available through application programming interfaces, Mathieu said. The data access she hopes for would meet terms of service to protect users but would grow “as we build trust across the community,” Mathieu said.

The threat center would seek only data about threat signals, historical archives on known, attributed disinformation campaigns and evidence of manipulation, as opposed to secure user data that Kelly said is “rightfully protected” for privacy reasons.

To monitor threats in near real time, the center’s work would be automated and likely supported by human analysts. Graphika hopes to engage both big and small platforms as part of the effort.

“A number of people across various stakeholders have been talking about it for many years,” Mathieu told the DEF CON audience. “The challenge is funding.”