No wonder cybersecurity is so bad: There’s no way to measure it

When the founders of a new nonprofit that assesses the security of consumer software tried to develop a scoring system that would rate programs according to which security features they used, they ran into a “mind-blowing” problem.

No one had ever measured how well such features actually worked.

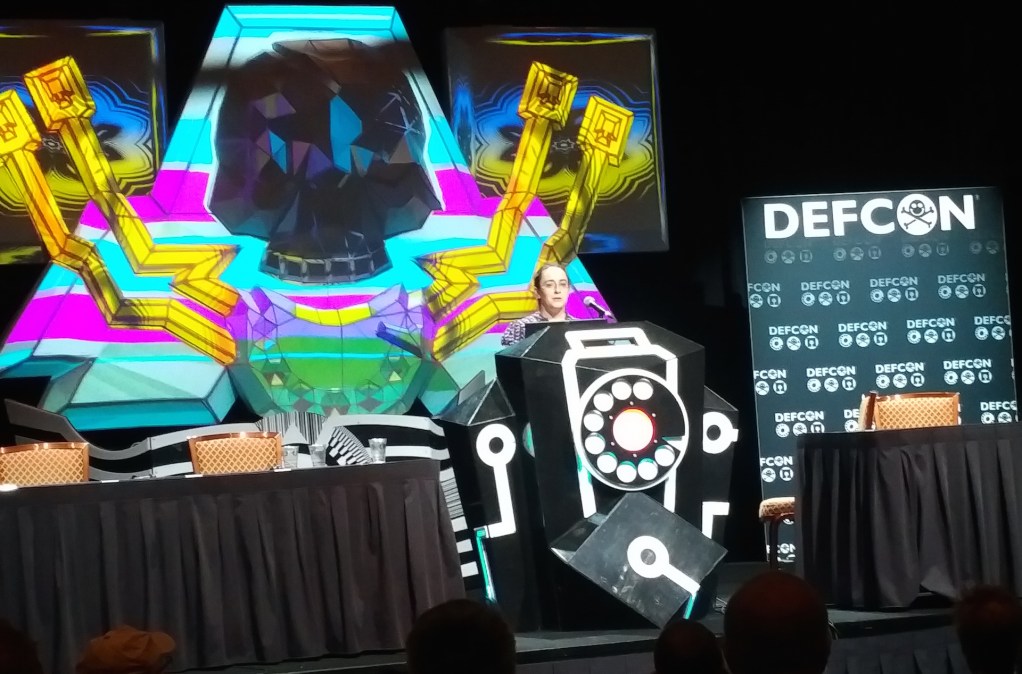

“There haven’t been a lot of studies that look at how effective are the safety measures that we use and trust,” Sarah Zatko, co-founder of the Cyber Independent Testing Lab, told a session at the DEF CON hacker convention Friday.

The gap, she said, helped create space for the relatively high proportion of “snake oil” products in the cybersecurity market.

“In most other industries that sort of data [about how well different security measures worked relative to each other] would be pretty fundamental — something you could take for granted that it existed,” said Zatko, whose husband and co-founder is Peiter Zatko, who uses the hacker handle Mudge.

But when the Zatkos began trying to assemble a single numeric score from the various security factors they were measuring in software, they realized they had no basis to weigh the significance of the different measures against one another — because no one ever seemed to have rated their effectiveness.

The 100-plus technical measures Cyber ITL was examining included things like:

- Address space layout randomization, or ASLR, a memory-protection technique that makes buffer-overflow attacks harder to exploit by randomizing where executables, libraries, the stack and the heap are loaded in memory.

- Data execution prevention, or DEP, which marks certain regions of memory, such as the stack and the heap, as non-executable, so that code an attacker injects there cannot run.

- Stack hardening, such as stack canaries, which detects stack-based buffer overflows before a corrupted return address can be used to hijack a program.
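To see why the missing data matters, consider a deliberately simplified, hypothetical scoring scheme. The Python sketch below treats each measure as a yes/no flag and combines the flags with a weighted average. The program names, flags and weights are invented for illustration and are not Cyber ITL's method or data; the point is only that changing the weights, with nothing else changed, can flip which program ranks higher.

```python
# Hypothetical illustration: the feature flags and weights below are invented
# for this sketch and are NOT Cyber ITL's data or scoring method.

def score(features: dict[str, bool], weights: dict[str, float]) -> float:
    """Weighted share of hardening features present, on a 0-100 scale."""
    total = sum(weights.values())
    # Add up the weight of every measure the program actually uses.
    earned = sum(w for name, w in weights.items() if features.get(name, False))
    return 100 * earned / total

program_a = {"aslr": True, "dep": True, "stack_hardening": False}
program_b = {"aslr": False, "dep": False, "stack_hardening": True}

# Two equally arbitrary weightings of the same three measures.
weights_1 = {"aslr": 1.0, "dep": 1.0, "stack_hardening": 1.0}
weights_2 = {"aslr": 1.0, "dep": 1.0, "stack_hardening": 5.0}

for label, w in (("equal weights", weights_1), ("stack-heavy weights", weights_2)):
    a, b = score(program_a, w), score(program_b, w)
    print(f"{label}: A={a:.1f}, B={b:.1f}")
```

Under equal weights, program A scores about 66.7 and program B about 33.3; giving stack hardening five times the weight reverses the ranking, to roughly 28.6 versus 71.4. Without studies of how much each measure actually reduces risk, neither weighting is more defensible than the other.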

“I had never realized what a weird blind spot that was until I needed that data for this effort,” Zatko said.

“It’s just sort of mind-blowing,” she said, adding that filling this gap was now the top short-term priority for Cyber ITL.

“The end goal is of course to make the software industry safer and easier for people to navigate,” she explained.

Using their own data from both static and dynamic software analysis, the husband-and-wife team will conduct “studies about how impactful are the different [security] elements that we’re looking at and how much should they affect the final score.”

That way, she said, when they did get around to publishing their security scores they would be “numbers we’re ready to get into a fight over … things that are really solid and that we really stand behind.”

“It will be a big deal,” she promised, explaining that many in the security field shared their concern about the lack of quantitative metrics, which makes it hard to show the value of a particular security control, technique or program.

“We’re not the only ones who are frustrated about the lack of quantification of impact for any of the [security measures] that we hope to make industry standards,” she said.

And for a very good reason: “I believe that this gap in the body of existing research is at least partially responsible for the success of snake oil salesmen in the cybersecurity industry, because for the stuff that really has value and substance and [works], and for the snake oil, the same argument is being made: ‘I’m an expert and this works, trust me.’”

Without authoritative metrics, non-experts don’t have the data they need to make a rational decision, “so whichever one is better marketed and prettier … is the one they go for,” she said.

Zatko said that measuring the effectiveness of security was hard, and wouldn’t earn you any friends.

“It’s not … sexy, it’s not … exciting, and there are enough other problems [to work on], so people don’t do it.”